How do computers see gender?

1 or 0

M or F

In computing, the term Boolean describes a value that can only ever be one of two possibilities.

Think: TRUE/FALSE, YES/NO, ON/OFF

That’s how most computer systems have been built to categorize gender, and it’s been that way since the 1950s.

Why? Because as far as data goes, 1s and 0s are considered simpler and more efficient, and they take up less space [1].
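To make that concrete, here is a minimal sketch of what a Boolean gender field can look like in code. It is an illustration only: the `BinaryRecord` class, the `is_male` field, and both helper functions are hypothetical names invented for this example, not taken from any real system.

```python
from dataclasses import dataclass


@dataclass
class BinaryRecord:
    """Hypothetical record that stores gender as a single Boolean flag."""
    name: str
    is_male: bool  # True -> "M", False -> "F"; there is no third option


def encode_gender(self_described: str) -> bool:
    # Anything that is not exactly "M" collapses into False, which reads as "F".
    return self_described == "M"


def gender_label(record: BinaryRecord) -> str:
    # A Boolean has exactly two states, so only two labels can ever come out.
    return "M" if record.is_male else "F"


record = BinaryRecord(name="Sam", is_male=encode_gender("agender"))
print(gender_label(record))  # prints "F": the field has no way to say anything else
```

The flag is compact, but the cost shows up in the last line: whatever the person actually said about themselves is lost the moment it is stored.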

Automatic Gender Recognition (AGR) is a subfield of facial recognition that aims to algorithmically identify the gender of individuals from photographs or videos.

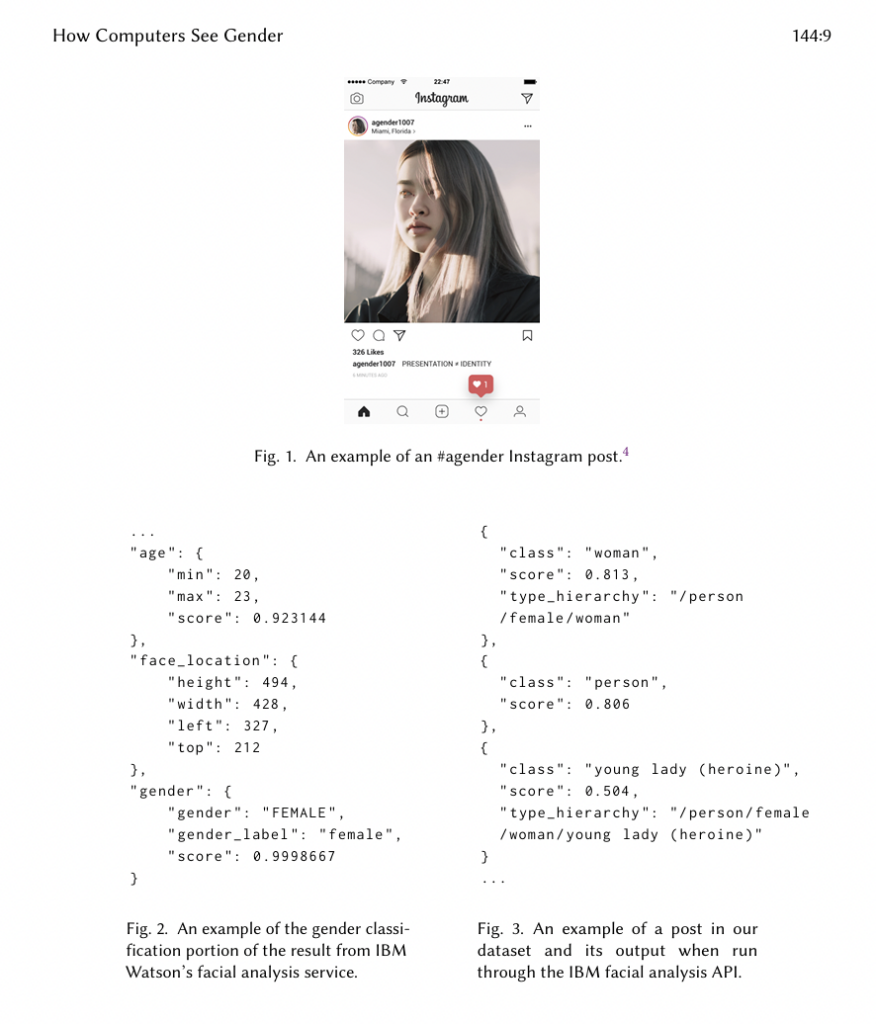

In this example, different image labeling services classify a post of an agender person in ways that misgender them and follow cisnormative structures [2].

How would data like this inform the ads this person gets?

How would it inform someone using a screen reader?

How would it be used to scan them in airport security?

How about sorting and filtering systems in hiring?

Or in healthcare?

As technology advances, trans-inclusionary measures are critical.

When considering designing or implementing technologies like AGR… [3]

Ask: do we even need to know the person’s gender? Increased surveillance of trans people makes wide adoption of these technologies dangerous, whether the harm is indirect (perpetuating bias) or direct (restricting access to services).

If you must know, use self-disclosed data. Indicators exist – like pronouns, gender identification, and hashtag use – that allow these technologies to be non-binary, to stay separate from physiological traits, and to change over time.
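As a rough sketch of what self-disclosed data could look like in practice, the example below stores gender as optional free text in the person's own words, keeps it apart from any physical measurement, and lets it change over time. The `GenderDisclosure` and `Profile` classes and their fields are assumptions made up for illustration, not a recommended standard or an existing API.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class GenderDisclosure:
    """One self-disclosed entry; every descriptive field is optional free text."""
    recorded_on: date
    identity: Optional[str] = None  # in the person's own words, not a fixed menu
    pronouns: Optional[str] = None  # e.g. "they/them"


@dataclass
class Profile:
    name: str
    disclosures: List[GenderDisclosure] = field(default_factory=list)

    def disclose(self, recorded_on: date, identity: Optional[str] = None,
                 pronouns: Optional[str] = None) -> None:
        # Add a new entry instead of overwriting, so earlier entries are kept.
        self.disclosures.append(GenderDisclosure(recorded_on, identity, pronouns))

    def current(self) -> Optional[GenderDisclosure]:
        # Nothing is inferred: if the person never shared anything, return None.
        if not self.disclosures:
            return None
        return max(self.disclosures, key=lambda d: d.recorded_on)


profile = Profile(name="Sam")
print(profile.current())  # prints None: no disclosure, so no guess

profile.disclose(date(2023, 5, 1), identity="non-binary", pronouns="they/them")
profile.disclose(date(2025, 3, 31), identity="agender", pronouns="they/them")
print(profile.current().identity)  # prints agender: the most recent disclosure
```

The key design choice is that "unknown" is a valid answer. The system only ever reports what the person chose to share, and it never fills the gap with a guess from a photo.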

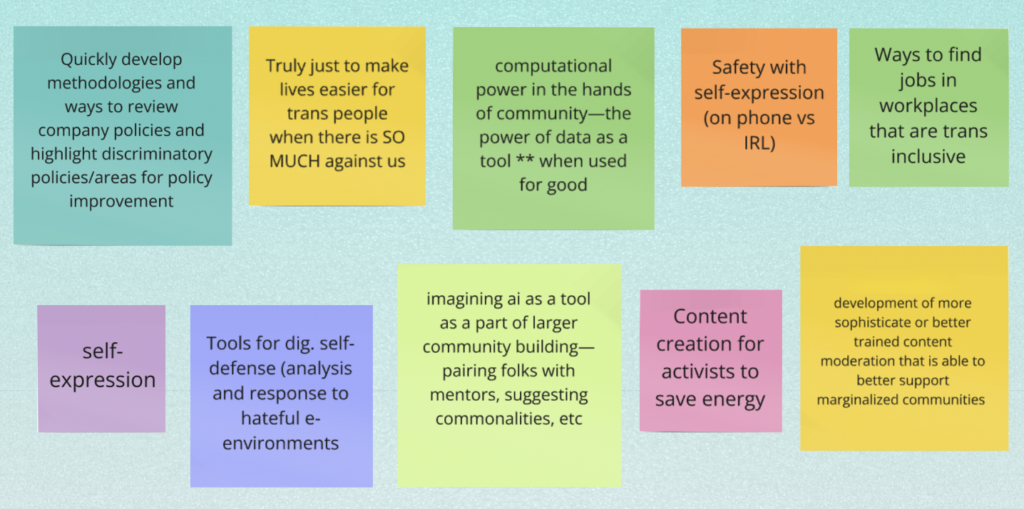

Here are some examples of positive tech applications (specifically AI) from our Trans Day of Remembrance event last November, led by Blair Attard-Frost, who researches AI governance through a trans lens [4].

Today is Trans Day of Visibility.

With *everything* going on that affects the rights and safety of trans people, it’s more important than ever to look critically at how technology is designed and implemented.

1. “When Binary Code Won’t Accommodate Nonbinary People.” Slate, 2019.

2. Scheuerman, M. K., Paul, J. M., & Brubaker, J. R. (2019). How Computers See Gender: An Evaluation of Gender Classification in Commercial Facial Analysis Services. Proceedings of the ACM on Human-Computer Interaction, 3(CSCW), Article 144.

3. Keyes, O. (2018). The Misgendering Machines: Trans/HCI Implications of Automatic Gender Recognition. Proceedings of the ACM on Human-Computer Interaction, 2(CSCW), Article 88.

4. Attard-Frost, B. (2024, November 18). AI Ethics & Trans Resistance [Presentation].
